October 13, 2025

It’s no secret generative AI is blurring the lines between human voice and machine assistance. AI can seamlessly amplify how we write, shop, and share our experiences, including the reviews we leave behind. As experts in ratings and reviews (R&R) and user-generated content (UGC), we face a crucial question: What does it mean for user-generated content to be “authentic” in the age of AI?

After two decades protecting the integrity of consumer voices, our position is clear: Authenticity comes down to intent. A review is authentic if it comes from a real user with real experience. It’s fraudulent if its purpose is to mislead and manipulate trust.

At Bazaarvoice, we see AI as an ally, not an enemy. Just as spell check once helped us write more clearly, AI can help consumers express their real experiences more easily. Our philosophy is simple: AI is a tool, and we focus on how it’s being used. We embrace the positive applications of AI while staying vigilant against deception because protecting consumer trust has always been our top priority. AI is here to stay, and we choose to evolve with it, shaping how it serves authenticity rather than resisting it. In commerce it’s simple: no trust, no transaction.

The bad actors: AI as a tool for deception

Fraudsters and bad actors now have access to AI, allowing them to quickly churn out high volumes of fake reviews, complete with convincing prose and manufactured anecdotes. Shoppers are onto this, too; a striking 75% of consumers are concerned about encountering fake reviews when shopping online.

These reviews aren’t born from an honest attempt to share an experience. Their sole purpose is to manipulate product ratings and mislead other consumers. The intent, simply put, is fraudulent. This deceit has a real impact: a concerning 52% of shoppers say they won’t buy a product without seeing authentic content first. And think about this: Nearly all shoppers (97%) say fake reviews make them lose trust in a brand.

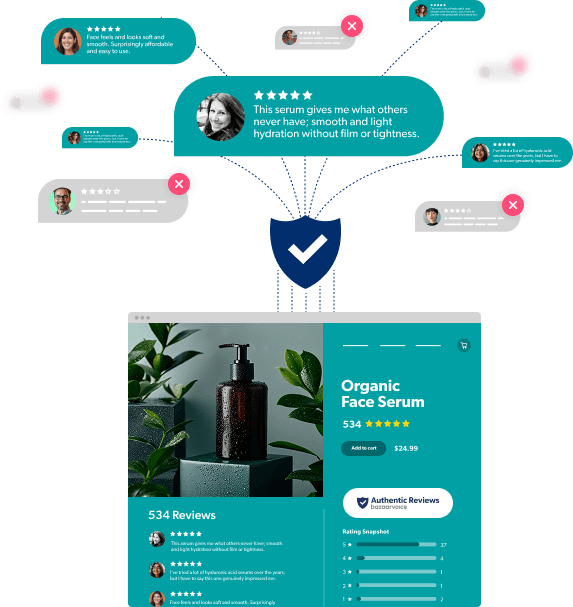

Bazaarvoice’s advanced fraud detection technology steps in here: it proactively detects bad behavior at scale, using the same powerful AI advances that fraudsters rely on. We don’t stop at analyzing the words; we dive deep into the data signals behind them, which tell us not just about the content but also about its author and their intent. Our Authenticity team rejects content associated with fraudulent activity by looking first and foremost at the reviewer’s behavior patterns to assess their intent, not merely the tools they might have used.

The everyday user: AI as a tool for expression

Let’s pivot from malicious use to the potential benefits for the average, well-intentioned customer.

Just as your customer care teams might use AI tools to quickly handle inquiries, shoppers might turn to AI to help better articulate their experience with your products and services. Here are some of the user scenarios:

- The writer’s block: A customer had a fantastic experience but struggles to put their feelings into words. They use a simple AI prompt to craft clear, concise feedback. The consumer inertia here is real: The Shopper Experience Index reveals 54% of consumers are “Reactive Creators,” meaning they are happy to share their opinion if they are simply asked to rate a product. AI can serve as the perfect prompting companion.

- The non-native speaker: A customer who isn’t fluent in the product’s language uses AI to translate their thoughts, ensuring their genuine sentiment is accurately conveyed and refined.

- The time-stressed parent: A busy parent only has time to jot down a few bullet points about a product. They use AI to turn those notes into a coherent, helpful review. This kind of assistance is crucial because 65% of shoppers already consider user-generated content important to their experience.

In every one of these cases, the core sentiment is real. The customer genuinely used the product and is using AI to facilitate the act of leaving a review, not to invent a false event. Even in these scenarios, though, we want to take care to preserve the consumer’s own voice while amplifying their experience.

The Bazaarvoice philosophy: focus on intent

Relying only on “AI detectors” to police reviews is a flawed strategy. These tools can’t always tell the difference between fraud and genuinely helpful, AI-assisted content. This is why our focus remains laser-sharp on the authenticity of the experience.

Our moderation system blends sophisticated technology with expert human review. We look for the behavioral patterns of deception as much as the linguistic ones. For more on how seriously we take integrity, be sure to read our authenticity policy. The necessity of this authenticity is only growing as the AI shopping shift transforms product discovery and consumer reliance on verified UGC.

Moderation guidelines

Here’s what you can expect Bazaarvoice moderators to reject:

- Content that uses AI to mislead consumers by distorting the product or user experience.

- Content that is devoid of the human tone and voice that make a review authentic.

Partnering on authenticity

Brands and retailers are crucial partners in this effort.

- Customize your submission form: If you want to discourage AI use, you should note it in your submission form guidelines, keeping in mind that not all consumers using AI have ill intent.

- Share your concerns: If you do have concerns about specific reviews, please create a support case for our team to investigate and reject accordingly. Clients can create a support case directly in the Bazaarvoice Portal.

- Enable Intelligent Trust Mark: This clear, visible sign signals that the reviews on a product page meet our strict authenticity standards, meaning they are free from fraud and reflect genuine consumer opinions. This signal matters because a resounding 70% of consumers would trust an industry-leading, third-party authentication provider to verify content trustworthiness. It’s a powerful statement of our focus on intent.

Building trust in a new era

Ultimately, AI is a neutral tool. It acts as a force multiplier for both the good guys and the bad guys. As a brand or retailer, your job is to harness this technology to your advantage and partner with platforms that can actively protect you from malicious intent. The goal hasn’t changed: we must build and maintain trust. In this new era, that means looking beyond the tools themselves to focus on the human intent behind every single review. Rest assured, Reviews Still Matter in the Age of AI; they are the essential, trustworthy content that fuels commerce.